The Metaverse Will Engage All Five Senses

The expected rise of the metaverse and VR headsets

Even as virtual reality (VR) and augmented reality (AR) continue to grow and evolve, along comes an even more expansive concept: the metaverse. Where AR and VR are fundamentally visual in nature, we expect the metaverse to engage all our senses to make the blending of real and digital worlds an even richer experience.

Humans are visual creatures, so VR and AR will be the foundational elements upon which the metaverse is built.

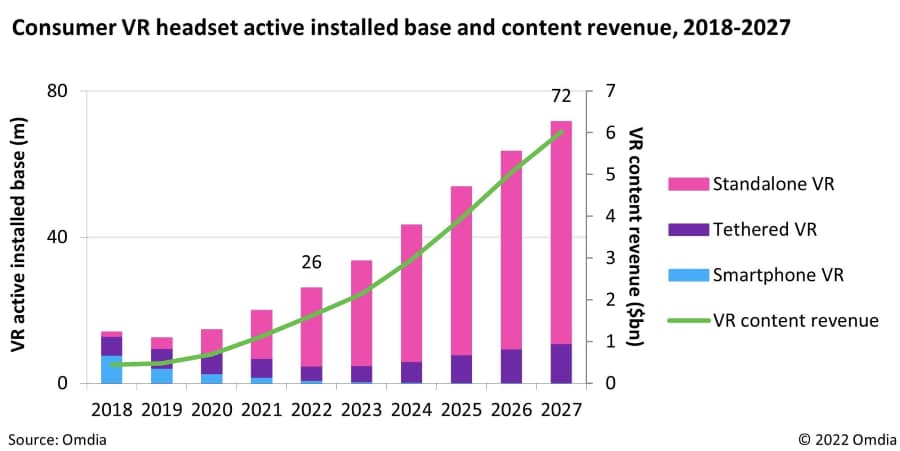

Virtual reality applications are expected to continue to grow in popularity, and VR headset sales should grow accordingly.

Market research firms expect AR growth to follow a similar trajectory, just a few years later (Source: Omdia).

Sensors are fundamental for virtual experiences

VR is defined in part by the use of goggles, and AR by the use of eyeglasses and headsets. The metaverse will be defined by wearables, though AR gear will certainly be an intrinsic part of the experience.

As much as VR and AR are fundamentally visual experiences, motion and positioning sensors are intrinsic enablers. These sensors are critical for several reasons, chief among them tracking where VR/AR/metaverse users are and how they move in real space, both to keep them safe and to translate their motions into the virtual world.

The sensors available for sensing the environment and determining position include:

- accelerometers for linear movement
- gyroscopes for rotational movement
- tunnel magnetoresistance (TMR) magnetometers for high-performance directional sensing
- pressure sensors to detect vertical position (height)
- ultrasonic sensors for time-of-flight (ToF) detection, useful for ranging and object detection
- lidar/radar for object detection and identification
- MEMS microphones for voice interfacing
- body/ambient temperature sensors for sensing the environment
- visual sensors for object detection and also for ToF

Three-axis accelerometers and 3-axis gyroscopes are combined to detect motion with six degrees of freedom (6DoF). Integrating a 3-axis magnetometer leads to 9DoF sensing.
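As a minimal sketch of why the extra axes matter, the Python below recovers roll and pitch from the gravity vector alone. It assumes Python 3.9+, a hypothetical `NineAxisSample` record, and a common axis convention in which a level, stationary device reads roughly (0, 0, +9.81) m/s² on its accelerometer. Yaw (heading) cannot be recovered this way, because rotating about the vertical axis leaves the gravity vector unchanged; that is the gap a magnetometer fills in 9DoF sensing. This is an illustration, not TDK firmware.

```python
import math
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


@dataclass
class NineAxisSample:
    """Hypothetical container for one 9DoF reading."""
    accel: Vec3  # accelerometer, m/s^2 (linear movement plus gravity)
    gyro: Vec3   # gyroscope, deg/s (rotational movement)
    mag: Vec3    # magnetometer, microtesla (needed for yaw; unused here)


def tilt_from_accel(accel: Vec3) -> tuple[float, float]:
    """Return (roll, pitch) in degrees from the measured gravity vector.

    Only valid while the device is not otherwise accelerating, and it
    says nothing about yaw (heading).
    """
    ax, ay, az = accel
    roll = math.degrees(math.atan2(ay, az))
    pitch = math.degrees(math.atan2(-ax, math.hypot(ay, az)))
    return roll, pitch


# A headset rolled about 30 degrees to one side (illustrative values).
sample = NineAxisSample(accel=(0.0, 4.905, 8.496), gyro=(0.0, 0.0, 0.0), mag=(20.0, 0.0, -40.0))
roll_deg, pitch_deg = tilt_from_accel(sample.accel)
```

For the sample reading, `roll_deg` comes out at roughly 30° and `pitch_deg` at roughly 0°, while the magnetometer and gyroscope fields are simply carried along for a later fusion step.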

All of these sensors have been used for motion sensing, positioning, and/or object detection in the automotive sector for many years, but not all of them are commonly used in VR/AR yet. ToF sensing, for example, is a newer capability: VR/AR application developers are adopting it for spatial positioning, but its potential extends well beyond that, and developers are still learning how to take full advantage of it. Several of the most popular smartphones now incorporate lidar for depth sensing, and it is easy to imagine lidar becoming useful in AR and metaverse applications.

For more information, see:

- “These Technologies Help Speed the Adoption of Augmented-Reality Systems,” by TDK InvenSense CTO Peter Hartwell (Electronic Design)
- Introduction of TDK 6DoF MEMS sensor
- MEMS ultrasonic sensor: Pushing the boundaries of AR/VR technology
- Discover the broad portfolio of TDK piezo solutions

Sensors for the metaverse

The metaverse will eventually engage all five senses.

Sight: Cameras have long been included in AR systems for all the same reasons they are in our smartphones. They will continue to be used in metaverse applications.

Sound: Voice recognition is already common in many IoT devices. Microphones are being added to VR/AR/metaverse headsets and will be incorporated into wearables for the same reasons.

Touch: Data from many of the same sensors used for motion detection, positioning, and object detection can be fed into haptic devices. These might be anything from bracelets to gloves to partial- and full-body suits. TDK is developing haptic technologies that can convey not only that something is being touched and how firmly, but also the texture of that object.

Smell and Taste: TDK is currently developing CO2 detectors that could warn of potentially hazardous environmental conditions that most people could not detect on their own. Other sensors can identify the presence, and sometimes even the quantity, of various chemical compounds. These sensors cannot convey to users what a feast depicted in a video game might smell or taste like, but a selection of gas and chemical detectors might be able to tell us whether a food item in the real world is unsafe to eat, or detect and identify a flower just by its fragrance.

The metaverse is likely to be modular, involving many different wearables that could be used in any combination depending on which metaverse apps each person chooses to use and how immersive they want their metaverse experiences to be.

Few VR/AR headset users realize how many different types of sensors are incorporated into the gear.

A new display for AR & VR

Displays are fundamental to both VR and AR. VR headsets enclose LED displays (of one type or another) inside goggles. There are different approaches to building AR rigs, but they generally involve either mounting a tiny digital display somewhere in the user’s field of view or projecting digital content onto the headset’s lenses. The metaverse experience will depend on AR rigs used in conjunction with wearable technology.

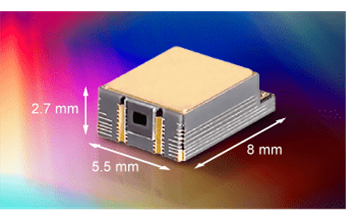

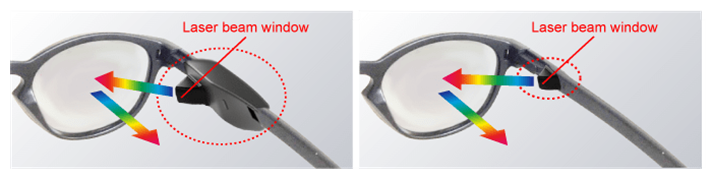

TDK recently introduced a new projection option. We have created a small, lightweight laser module that steers full-color digital imagery straight to users’ retinas.

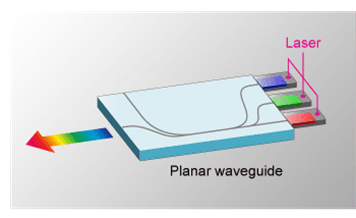

The new technology, which combines light emitted from laser elements through a planar path, can achieve a full-color display of approximately 16.2 million colors within an ultra-small laser module.

Much of today’s AR hardware is obtrusive, heavy, and unattractive. Our new tiny laser modules will make it possible to build both VR and AR headsets that are smaller and significantly lighter.

An exciting additional benefit of our technique is that it produces crisp, clear images even for people who have imperfect vision.

For more information, see:

- Laser Module: A Game-Changer for AR

Product image using a space-optics module.

Product image using the new Planar Waveguide module. Reduced module size contributes to overall decrease in size and weight of smart glasses.

Beams emitted from TDK’s tiny new ultralight laser module are steered onto the eye’s retina. Unlike how one sees real objects, images projected directly onto the retina will always be sharp, without the need

Sensor fusion and enabling software

Data from these different sensors must be processed, and the software that integrates that data must be continuously refined to deliver progressively richer, more enjoyable, and safer experiences.

One example of this kind of software enrichment applies to ultrasonic sensors. TDK SmartSonic technology adds intelligence to TDK’s ToF sensors through an integrated ultra-low-power SoC, providing system developers with pre-processed data such as nearby target distance, presence detection, and more.
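To illustrate the kind of pre-processing involved, here is a minimal Python sketch of ultrasonic ToF ranging and a simple presence check. It is not the SmartSonic algorithm or API; the function names, the 1 m presence threshold, and the linear speed-of-sound approximation (about 331.3 + 0.606·T m/s in dry air, T in °C) are assumptions for illustration. The key step is halving the round-trip path, because the pulse travels to the target and back.

```python
from typing import Optional


def speed_of_sound_mps(temp_c: float) -> float:
    """Approximate speed of sound in dry air; linear fit, good near room temperature."""
    return 331.3 + 0.606 * temp_c


def echo_distance_m(round_trip_s: float, temp_c: float = 20.0) -> float:
    """Target distance from an ultrasonic echo.

    The pulse travels to the target and back, so the one-way distance
    is half of (speed of sound * round-trip time).
    """
    return speed_of_sound_mps(temp_c) * round_trip_s / 2.0


def presence_detected(
    round_trip_s: Optional[float], max_range_m: float = 1.0, temp_c: float = 20.0
) -> bool:
    """Report presence if an echo returned from within max_range_m (hypothetical threshold)."""
    if round_trip_s is None:  # no echo received above the noise floor
        return False
    return echo_distance_m(round_trip_s, temp_c) <= max_range_m
```

At 20 °C, an echo that returns after 2.9 ms corresponds to a target roughly 0.5 m away, so `presence_detected(0.0029)` reports a presence with the default 1 m threshold. Passing in a reading from an ambient temperature sensor through `temp_c` is a small example of one sensor refining another's output.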

Data from different sensors can be compared to draw conclusions. If a VR system’s pressure sensor reports that the user’s headset remains at the same height while the 6DoF sensor reports that the headset is tilting from 0 degrees to 45 degrees, the system can compare height data against tilt data and infer that the user is looking up but is not standing up, sitting down, or crouching.
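The comparison described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production classifier: it converts a pressure change into a height change with the hydrostatic approximation Δh = −Δp / (ρg), assuming sea-level air density (about 12 Pa per meter), treats positive pitch as looking up, and uses hypothetical tolerance values. Real barometric readings are noisy and would need filtering before a comparison like this.

```python
AIR_DENSITY_KG_M3 = 1.225  # sea-level standard atmosphere (assumption)
GRAVITY_M_S2 = 9.80665


def height_change_m(p_start_pa: float, p_end_pa: float) -> float:
    """Hydrostatic approximation dp = -rho * g * dh: falling pressure means rising height."""
    return (p_start_pa - p_end_pa) / (AIR_DENSITY_KG_M3 * GRAVITY_M_S2)


def classify_head_motion(
    p_start_pa: float,
    p_end_pa: float,
    pitch_start_deg: float,
    pitch_end_deg: float,
    height_tol_m: float = 0.15,  # hypothetical tolerance
    tilt_tol_deg: float = 10.0,  # hypothetical tolerance
) -> str:
    """Combine barometric height change with headset tilt (positive pitch = looking up)."""
    dh = height_change_m(p_start_pa, p_end_pa)
    dpitch = pitch_end_deg - pitch_start_deg
    if dh > height_tol_m:
        return "standing up"
    if dh < -height_tol_m:
        return "sitting down or crouching"
    # Height is essentially unchanged, so any tilt is attributed to head motion.
    if dpitch > tilt_tol_deg:
        return "looking up"
    if dpitch < -tilt_tol_deg:
        return "looking down"
    return "no significant motion"
```

With constant pressure and a tilt from 0 to 45 degrees, `classify_head_motion(101325.0, 101325.0, 0.0, 45.0)` returns `"looking up"`, matching the scenario in the text.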

Sensor fusion involves far more than simply comparing data; it combines the data from multiple sensors to get more accurate results than any one of them could achieve on its own. TDK’s positioning sensor modules don’t merely package a gyroscope, an accelerometer, and a magnetometer together; they fuse the data from all three to deliver the most accurate possible data on pitch, yaw, roll, and linear direction.
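One of the simplest ways to see fusion beat its inputs is a complementary filter, sketched below in Python 3.9+ for pitch only. This is a swapped-in textbook technique, not TDK's algorithm, which the article does not describe. The gyroscope is smooth but its integrated angle drifts with any bias; the accelerometer gives an absolute but noisy angle. Blending them with a weight `alpha` (0.98 here, a hypothetical choice) keeps the gyro's smoothness while the accelerometer term continuously pulls the drift back. Yaw would need an analogous correction from the magnetometer.

```python
import math


def accel_pitch_deg(ax: float, ay: float, az: float) -> float:
    """Absolute pitch from gravity: no drift, but noisy and upset by linear acceleration."""
    return math.degrees(math.atan2(-ax, math.hypot(ay, az)))


def complementary_pitch(
    samples, dt_s: float, alpha: float = 0.98, initial_deg: float = 0.0
) -> list[float]:
    """Fuse gyro pitch rate (deg/s) with accelerometer pitch.

    samples: iterable of (gyro_pitch_rate_dps, (ax, ay, az)).
    alpha weights the integrated gyro (smooth, but drifts);
    1 - alpha weights the accelerometer (drift-free, but noisy).
    """
    pitch = initial_deg
    history = []
    for rate_dps, accel in samples:
        gyro_estimate = pitch + rate_dps * dt_s    # integrate angular rate
        accel_estimate = accel_pitch_deg(*accel)  # absolute reference
        pitch = alpha * gyro_estimate + (1.0 - alpha) * accel_estimate
        history.append(pitch)
    return history
```

For a stationary, level headset whose gyro has a 0.5 °/s bias, sampled at 100 Hz for 10 s, integrating the gyro alone would drift by 5°, whereas the fused estimate settles at about 0.25°.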

Sensor fusion is complex, and experience is a huge advantage. TDK has provided suites of sensors to the automotive and industrial automation markets, helped pioneer sensor fusion, and is bringing that expertise to bear on the VR/AR/metaverse market with its sensors, embedded processors, and software development skills.